What is Mixture of Experts (MoE)?

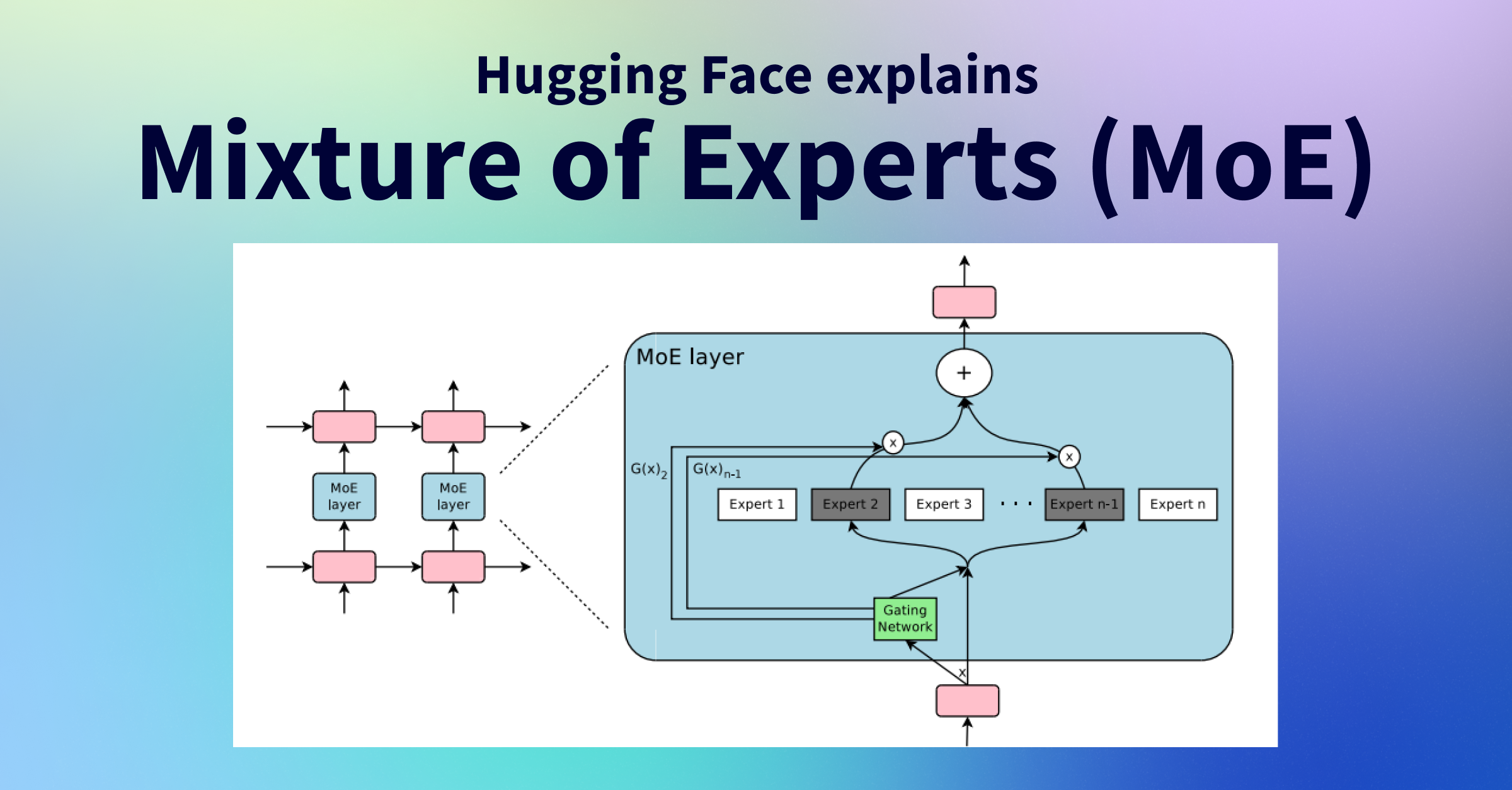

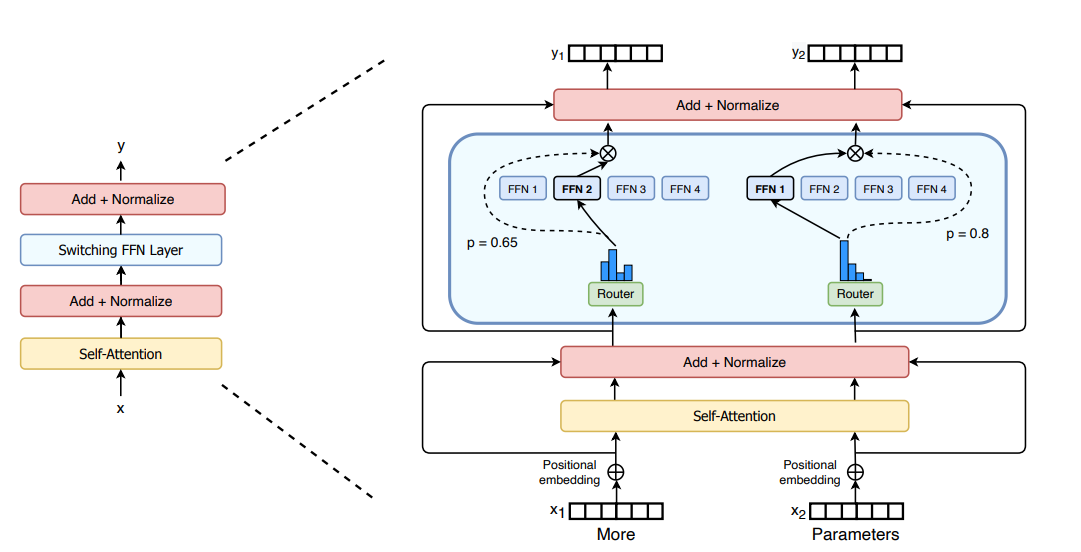

Mixture of Experts (MoE) is a neural network architecture in which parts of a single dense network are replaced by multiple sub-networks known as "experts." Each expert tends to specialize in processing different kinds of input.

In the context of Large Language Models (LLMs), MoE replaces the dense feedforward layers of the transformer (in some or all blocks) with a set of expert networks, allowing the model to have a much larger parameter count while keeping computation costs manageable.

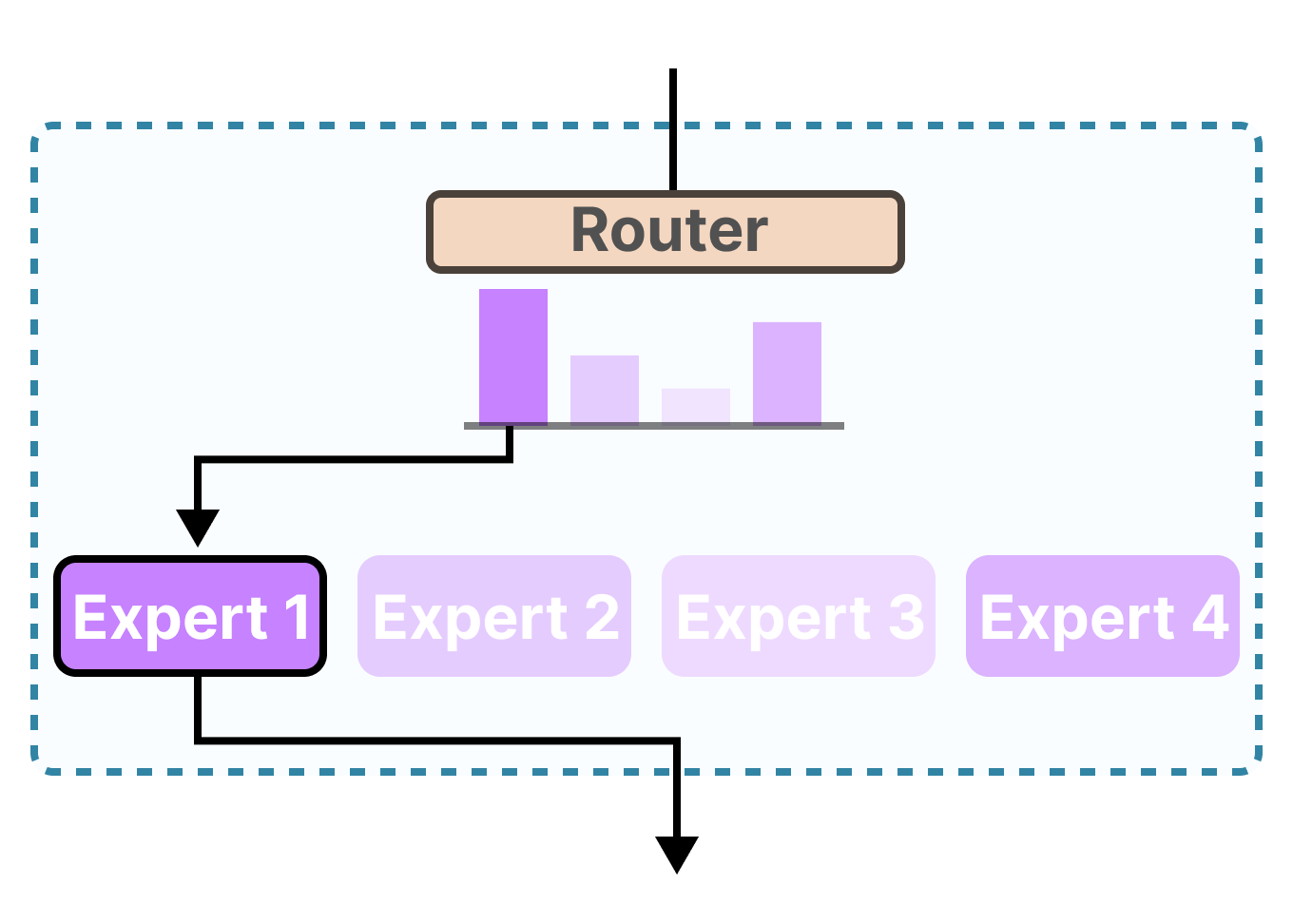

The key insight of MoE is that for any given input token (like a word or part of a word), only a small subset of the model's parameters needs to be activated. This selective activation is managed by a "router" or "gating network" that decides which expert(s) should process each input token.

"MoE enables models to be pretrained with far less compute, which means you can dramatically scale up the model or dataset size without a proportional increase in training costs." — Hugging Face Blog

How MoE Works in LLMs

Step-by-Step Process

1. Input Processing: The input token (or its embedding) enters the MoE layer within the transformer architecture.

2. Router Evaluation: A router (gate network) computes a probability distribution over all available experts using a softmax function to determine which experts are most suitable for this particular input.

3. Expert Selection: Based on these probabilities, the top-k experts (commonly just 1 or 2) are selected to process the token.

4. Parallel Processing: The selected experts process the token in parallel and generate their respective outputs.

5. Output Aggregation: The outputs from the active experts are weighted by the router's selection probabilities and combined to form the final output for that token.

6. Forward Propagation: The combined output is passed to subsequent layers in the model's architecture.

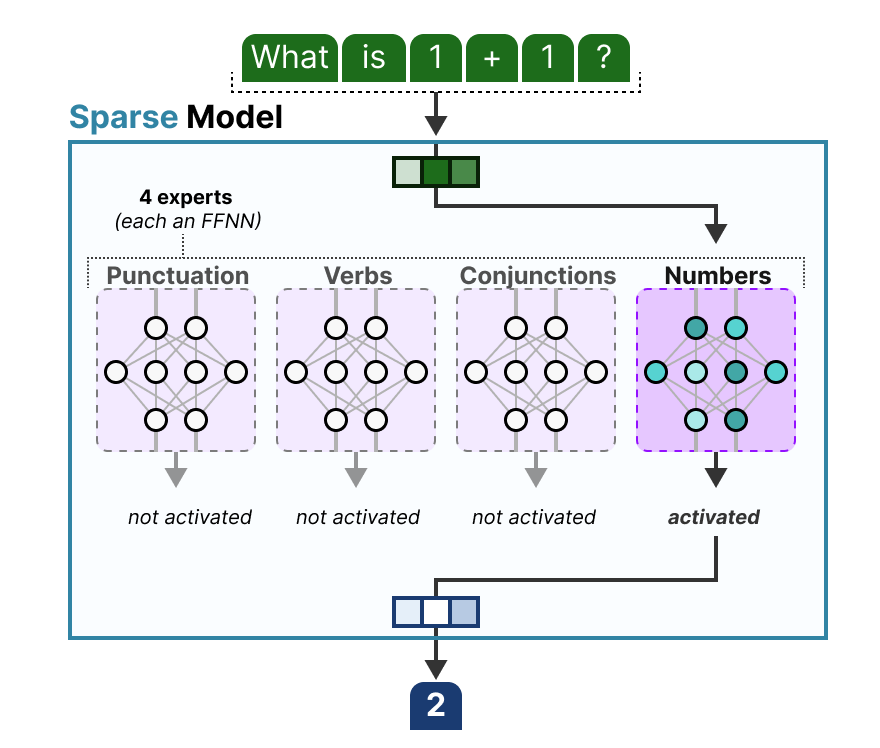

This mechanism allows the model to hold a very large total number of parameters (sometimes called sparse parameters) while using only a fraction of them (the active parameters) for each token at inference time. Capacity scales up, but the compute per token stays close to that of a much smaller dense model.
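The six steps above can be sketched in plain Python. This is a minimal illustration rather than any particular model's implementation: the toy experts, the random weights, the names `make_expert` and `moe_forward`, and the choice to renormalize the top-k gate weights so they sum to 1 are all assumptions made for the example.

```python
import math
import random

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def make_expert(rng, dim, hidden):
    """Toy two-layer feedforward expert with ReLU; weights are random stand-ins."""
    w_in = [[rng.gauss(0, 0.5) for _ in range(dim)] for _ in range(hidden)]
    w_out = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(dim)]
    def expert(x):
        h = [max(0.0, v) for v in matvec(w_in, x)]
        return matvec(w_out, h)
    return expert

def moe_forward(x, router_weights, experts, k=2):
    # Step 2: the router scores every expert; softmax turns scores into probabilities.
    probs = softmax(matvec(router_weights, x))
    # Step 3: keep the k most probable experts.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    # Assumption: renormalize the selected probabilities so the gate weights sum to 1.
    norm = sum(probs[i] for i in top)
    gates = {i: probs[i] / norm for i in top}
    # Steps 4-5: run only the selected experts and mix their outputs by gate weight.
    out = [0.0] * len(x)
    for i, g in gates.items():
        y = experts[i](x)
        out = [o + g * v for o, v in zip(out, y)]
    # Step 6: the caller passes `out` on to the next layer.
    return out, gates

rng = random.Random(0)
dim, hidden, num_experts = 4, 8, 8
experts = [make_expert(rng, dim, hidden) for _ in range(num_experts)]
router_weights = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
token = [0.3, -1.2, 0.8, 0.5]  # hypothetical token embedding
output, gates = moe_forward(token, router_weights, experts, k=2)
print("selected experts and gate weights:", gates)
```

Only 2 of the 8 expert functions are called for this token; the other 6 hold parameters that are never touched, which is where the gap between total and active parameters comes from.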

Key Components of MoE

Experts

Individual sub-models, typically implemented as feedforward neural networks. Each expert learns to specialize in processing particular types of inputs or linguistic patterns, enabling more fine-grained representations.

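Experts in an MoE architecture are often described as analogous to specialists in different fields: some experts might become skilled at processing mathematical content, while others might specialize in creative writing or scientific terminology. In practice, published analyses (for example, of Mixtral) suggest that specialization often follows token-level or syntactic patterns more than clear-cut topics.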

In most MoE implementations, the experts have identical architectures but differ in their learned parameters. During training, experts naturally develop specializations through the routing decisions made by the gating network.

Router/Gate Network

The component responsible for selecting which expert(s) should process each input token. It computes a probability distribution over all experts and routes tokens to the most appropriate specialists.

The router determines the routing probabilities by computing the similarity between the input token embedding and a learned weight matrix. These probabilities are then used to select the top-k experts for processing.

Different routing strategies exist, including "top-1" routing (where only the single most appropriate expert is selected) and "top-k" routing (where multiple experts are selected with different weights). The routing decision significantly impacts both model performance and computational efficiency.
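One common way to write this down is shown below. The notation is introduced here for illustration and is not taken from a specific paper: $x \in \mathbb{R}^d$ is the token embedding, $W_r \in \mathbb{R}^{N \times d}$ is the learned router weight matrix, $E_1, \dots, E_N$ are the experts, and $\mathcal{T}_k(x)$ is the set of $k$ experts with the highest routing probability.

$$
p(x) = \operatorname{softmax}(W_r\, x), \qquad
y = \sum_{i \in \mathcal{T}_k(x)} g_i(x)\, E_i(x)
$$

With top-1 routing the gate weight is usually the raw probability, $g_i(x) = p_i(x)$, so the router still receives a gradient. With top-k routing for $k > 1$, implementations often renormalize over the selected experts, $g_i(x) = p_i(x) / \sum_{j \in \mathcal{T}_k(x)} p_j(x)$. Which convention a given model uses is an implementation detail that should be checked against its code.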

Load Balancing Mechanisms

Techniques that ensure tokens are evenly distributed across experts, preventing some experts from being overused while others remain undertrained. These are crucial for MoE training stability.

Common load balancing techniques include:

- Auxiliary Loss: An additional training objective that penalizes uneven routing distributions

- Expert Capacity: Limiting the number of tokens each expert can process in a batch

- KeepTopK Method: Keeping only the top-k router scores for each token, typically combined with added noise (noisy top-k gating) so that tokens spread more evenly across experts

Without proper load balancing, MoE models can suffer from "expert collapse," where the router sends most tokens to just a few experts, effectively reducing the model to a much smaller one.
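As a concrete example of an auxiliary loss, the sketch below follows the form used in the Switch Transformer paper: `alpha * N * sum_i(f_i * P_i)`, where `f_i` is the fraction of tokens routed to expert `i` and `P_i` is the mean router probability for expert `i`. The function name, the toy batches, and the use of plain Python lists are assumptions for illustration; a real training loop would compute this on tensors.

```python
from collections import Counter

def load_balancing_loss(router_probs, assignments, num_experts, alpha=0.01):
    """Switch-Transformer-style auxiliary loss: alpha * N * sum_i f_i * P_i."""
    num_tokens = len(assignments)
    counts = Counter(assignments)
    # f_i: fraction of tokens whose top-1 expert is i.
    f = [counts.get(i, 0) / num_tokens for i in range(num_experts)]
    # P_i: mean router probability given to expert i across the batch.
    p = [sum(row[i] for row in router_probs) / num_tokens for i in range(num_experts)]
    return alpha * num_experts * sum(fi * pi for fi, pi in zip(f, p))

# Hypothetical batch of 8 tokens and 4 experts, perfectly balanced routing.
balanced_probs = [[0.25] * 4 for _ in range(8)]
balanced_assign = [0, 1, 2, 3, 0, 1, 2, 3]

# Hypothetical collapsed routing: every token goes to expert 0.
collapsed_probs = [[0.97, 0.01, 0.01, 0.01] for _ in range(8)]
collapsed_assign = [0] * 8

balanced = load_balancing_loss(balanced_probs, balanced_assign, 4)
collapsed = load_balancing_loss(collapsed_probs, collapsed_assign, 4)
print(f"balanced: {balanced:.4f}, collapsed: {collapsed:.4f}")
```

The loss is smallest when routing is uniform and grows as tokens concentrate on a few experts, so adding it to the training objective pushes the router away from expert collapse.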

Advantages of MoE

Improved Scalability

MoE allows models to incorporate billions of parameters while activating only a small subset during inference. This enables scaling to much larger model sizes without proportional increases in computation.

Computational Efficiency

By routing each token to only the most relevant experts, MoE avoids running every parameter on every token as a dense model does. For a given total parameter count, this leads to faster inference and lower energy use per token than an equally large dense model.

Specialized Knowledge

Each expert can develop specialized knowledge for particular domains or linguistic patterns, allowing the model to maintain both breadth and depth of understanding across diverse topics.

Training Efficiency

MoE models can achieve the same quality as dense models with significantly less compute during pretraining, enabling more efficient use of resources when developing new models.

Flexible Resource Allocation

The MoE architecture allows for dynamic allocation of computational resources to different parts of the input, focusing computing power where it's most needed for each specific token.

Comparison: Dense vs. MoE Model

| Metric | Traditional Dense Model | MoE Model |
|---|---|---|
| Parameters | All parameters used for every token | Only selected expert parameters used per token |
| Memory Usage | Proportional to model size | High (all experts loaded in memory) |
| Inference Speed | Slower for larger models | Faster for same parameter count |
| Training Compute | Higher for same quality | Lower for same quality |
| Specialization | General knowledge distributed across model | Specialized knowledge in different experts |

Real-world Implementations

MoE architecture has been successfully implemented in several prominent large language models, each with unique approaches and optimizations:

Mixtral 8x7B

Developed by Mistral AI, Mixtral is a sparse MoE model with 8 experts in each MoE layer. For each token, the router selects the 2 most relevant of the 8 available experts.

- ✓ Total of 46.7B parameters, of which only ~12.9B are used per token

- ✓ Reported by Mistral AI to outperform the larger dense Llama 2 70B on most benchmarks

- ✓ Efficient inference with top-2 expert routing

Mixtral is based on the Mistral 7B architecture but incorporates MoE layers that route tokens to 2 of 8 experts. This allows it to achieve performance comparable to much larger models while maintaining reasonable inference costs.
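The two parameter counts quoted above also explain why "8x7B" does not add up to 56B. The back-of-the-envelope calculation below assumes a simplified model in which every parameter is either shared by all tokens (attention, embeddings, router) or belongs to one of 8 equally sized experts. That split is an assumption for illustration, not a published breakdown.

```python
total_params_b = 46.7   # total parameters in billions (from the text)
active_params_b = 12.9  # parameters used per token in billions (from the text)
num_experts, top_k = 8, 2

# Assumed model:
#   total  = shared + num_experts * expert
#   active = shared + top_k * expert
# Subtracting the two equations isolates the per-expert size.
expert_b = (total_params_b - active_params_b) / (num_experts - top_k)
shared_b = active_params_b - top_k * expert_b
active_fraction = active_params_b / total_params_b

print(f"per-expert parameters (summed over layers): ~{expert_b:.2f}B")
print(f"shared parameters: ~{shared_b:.2f}B")
print(f"fraction of parameters active per token: {active_fraction:.1%}")
```

Under these assumptions each expert accounts for roughly 5.6B parameters and about 1.6B parameters are shared, so each token touches a little over a quarter of the model.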

The model illustrates the efficiency of MoE: it achieves strong benchmark results while keeping the active parameter count low. Routing decisions are made token by token, so expert selection can change from one token to the next.

GPT-4

According to unconfirmed reports, GPT-4 implements a form of MoE, using 8 smaller expert models rather than a single monolithic network.

- ✓ Reportedly multiple expert models, each specializing in different domains

- ✓ Reported to have 8 expert models of ~220B parameters each

- ✓ A coordinator (routing) system is said to manage routing between experts

While OpenAI has not officially confirmed the exact architecture, reports suggest that GPT-4 uses a mixture of experts approach to improve both performance and inference efficiency.

The reported architecture involves multiple specialist models coordinated by a central routing system, allowing for domain-specific expertise while maintaining generalist capabilities. This structure is believed to contribute to GPT-4's strong performance across diverse tasks.

Switch Transformer

Developed by Google Research, Switch Transformer pioneered the simplified MoE approach with top-1 routing, where each token is processed by only a single expert.

- ✓ Simplified routing with one expert per token

- ✓ Scales to trillion-parameter models

- ✓ Reduced communication overhead compared to other MoE approaches

Switch Transformer introduced several key optimizations to the MoE approach, including a simplified routing mechanism that selects only one expert per token, reducing communication costs and computational complexity.

The model also employs techniques such as selective precision (training in bfloat16 while casting the router computation to float32) and expert capacity factors to improve training stability and efficiency. These innovations allowed Switch Transformer to scale to over a trillion parameters while maintaining reasonable training costs.
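The expert capacity factor mentioned above can be illustrated with a short sketch. Capacity is computed as (tokens per batch / number of experts) × capacity factor, and tokens routed to an expert that is already full overflow. The rounding with `ceil`, the function names, and the first-come-first-served dispatch order are assumptions made for this example.

```python
import math

def expert_capacity(tokens_per_batch, num_experts, capacity_factor):
    # Assumption: round up so capacity is a whole number of tokens.
    return math.ceil(tokens_per_batch / num_experts * capacity_factor)

def dispatch(assignments, num_experts, capacity):
    """Fill each expert's buffer in token order; tokens past capacity overflow."""
    buckets = [[] for _ in range(num_experts)]
    overflow = []
    for token_idx, expert_idx in enumerate(assignments):
        if len(buckets[expert_idx]) < capacity:
            buckets[expert_idx].append(token_idx)
        else:
            overflow.append(token_idx)
    return buckets, overflow

assignments = [0, 0, 0, 0, 1, 2, 0, 3]  # hypothetical top-1 choices for 8 tokens
capacity = expert_capacity(len(assignments), num_experts=4, capacity_factor=1.25)
buckets, overflow = dispatch(assignments, 4, capacity)
print("capacity per expert:", capacity)
print("tokens per expert:", buckets)
print("overflowed tokens:", overflow)
```

Here the capacity is 3 tokens per expert, so two of the five tokens routed to expert 0 overflow. In Switch Transformer such tokens skip the expert and are carried forward by the residual connection. A larger capacity factor drops fewer tokens at the cost of more memory and computation.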

Other Notable MoE Implementations

| Model | Organization | Key Features | Year |
|---|---|---|---|
| GShard | Google Research | Top-2 routing, alternating MoE layers, expert capacity factor | 2020 |
| GLaM | Google Research | 1.2T-parameter MoE with 64 experts per layer; reported to use about one third of the energy needed to train GPT-3 | 2021 |
| NLLB-MoE | Meta AI | Multilingual translation model with 54.5B parameters, supporting 200 languages | 2022 |
| DeepSeek-MoE | DeepSeek | Open-source 16B-parameter MoE model with fine-grained and shared experts | 2024 |

Technical Challenges

Training and Inference Challenges

High Memory Requirements

Although MoE models activate only a subset of parameters during inference, all experts must be loaded into memory. This creates high VRAM requirements that can limit deployment options.
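A rough calculation shows the size of the gap. The sketch below uses the Mixtral 8x7B figures quoted earlier in this article and counts weights only (no KV cache, activations, or framework overhead). The bytes-per-parameter values are generic assumptions about storage precision, not measurements of any specific deployment.

```python
def weight_memory_gb(params_billions, bytes_per_param):
    """Approximate weight storage in GB (1 GB = 1e9 bytes); weights only."""
    return params_billions * bytes_per_param

total_b, active_b = 46.7, 12.9  # total vs. per-token active parameters, in billions

for label, nbytes in [("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)]:
    resident = weight_memory_gb(total_b, nbytes)  # must sit in memory
    touched = weight_memory_gb(active_b, nbytes)  # actually used for one token
    print(f"{label}: ~{resident:.1f} GB resident, ~{touched:.1f} GB used per token")
```

At 16-bit precision the full set of weights needs roughly 93 GB even though each token only uses about 26 GB worth of them, which is why lower-precision storage is a common way to fit MoE models on smaller hardware.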

Training Instabilities

The routing mechanisms in MoE architectures can lead to training instabilities. Additional losses (like auxiliary load balancing loss and router z-loss) are often required to stabilize training.
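The router z-loss, as described in the ST-MoE paper, penalizes the squared log-sum-exp of the router logits, averaged over tokens. The sketch below is a plain-Python illustration with made-up logits. In training, the result would be multiplied by a small coefficient and added to the main loss; the function names here are hypothetical.

```python
import math

def logsumexp(values):
    m = max(values)  # subtract the max for numerical stability
    return m + math.log(sum(math.exp(v - m) for v in values))

def router_z_loss(router_logits):
    """Mean over tokens of (log sum_j exp(logit_j)) squared."""
    return sum(logsumexp(row) ** 2 for row in router_logits) / len(router_logits)

# Hypothetical router logits for 2 tokens and 4 experts.
small_logits = [[0.1, -0.2, 0.0, 0.3], [0.2, 0.1, -0.1, 0.0]]
large_logits = [[10.0, -20.0, 0.0, 30.0], [20.0, 10.0, -10.0, 0.0]]

print(f"z-loss with small logits: {router_z_loss(small_logits):.3f}")
print(f"z-loss with large logits: {router_z_loss(large_logits):.3f}")
```

Large-magnitude logits produce a much larger penalty, so minimizing this term keeps the values entering the router's softmax small, which reduces round-off problems in low-precision training.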

Fine-tuning Challenges

MoE models tend to overfit during fine-tuning more quickly than dense models. They require different hyperparameters and sometimes selective parameter updating to prevent overfitting.

Architectural Challenges

Load Balancing

Ensuring that tokens are evenly distributed among experts is non-trivial. Uneven routing can lead to underutilization of some experts while overloading others.

Communication Overhead

In distributed training settings, MoE architectures require significant communication between devices as tokens are routed to experts that may be on different hardware. This can become a bottleneck.

Expert Capacity Planning

Determining the appropriate number of experts and their capacity is crucial. Too few experts can limit model capabilities, while too many can lead to inefficient resource utilization.

Solutions and Mitigations

For Training Stability

- Auxiliary load balancing loss to encourage even token distribution

- Router z-loss to prevent large routing logits and instability

- Selective precision techniques (lower precision for most of the model, with the router computed in float32)

- Dropout and regularization strategies specifically designed for MoE

For Deployment Efficiency

- Expert parallelism across multiple devices

- Quantization techniques to reduce memory footprint

- Expert pruning to remove redundant or underutilized experts

- Conditional loading of experts based on expected task demands

Research continues to develop improved solutions to these challenges, making MoE architectures increasingly practical for real-world deployment.

Future Directions

Hardware Optimizations

Future MoE implementations will likely focus on hardware-specific optimizations that minimize the memory and communication bottlenecks currently limiting deployment.

- Specialized accelerators for sparse matrix operations

- On-demand expert loading systems

- Distributed expert management frameworks

Advanced Routing Mechanisms

Research is exploring more sophisticated routing strategies that better understand the semantic content of tokens and make more informed expert selection decisions.

- Context-aware routing that considers token relationships

- Self-adjusting expert allocation during inference

- Hierarchical expert structures for multi-level specialization

Hybrid Architectures

Future models may blend MoE with other efficiency techniques to create hybrid architectures that overcome current limitations while preserving the benefits of expert specialization.

- Combining MoE with retrieval-augmented generation

- Integrating MoE with modular reasoning components

- Dynamic architecture adjustment based on input complexity

Emerging Research Trends

Continuous Expert Formation

Research into models that can dynamically create, merge, or prune experts during training based on observed data patterns, allowing for more flexible specialization.

Multi-modal Experts

Exploration of MoE architectures that handle different modalities (text, images, audio) with specialized experts for each type of data and cross-modal integration.

Fine-tuning Stability

Development of techniques to improve the stability and performance of MoE models during fine-tuning, addressing the overfitting challenges currently observed.

Cloud-native MoE Deployments

Systems for dynamically scaling MoE models in cloud environments, loading only the needed experts based on usage patterns and optimizing resource allocation.

As research in MoE architectures continues to advance, we can expect more efficient, scalable, and capable language models that leverage the power of specialized expertise.

Explore MoE in Your Projects

Mixture of Experts architectures represent one of the most promising directions for scaling language models efficiently.